Running the Grid, Running the Cloud

What Electricity and Artificial Intelligence Really Have in Common

Every day, we take two modern miracles for granted: the light turns on when we flip the switch, and answers appear when we ask a question online. Electricity powers our homes. Artificial intelligence powers our screens. But beneath their seamless performance lies a shared truth: both depend on enormous, complex systems working constantly—and invisibly—to stay balanced.

Surprisingly, these systems are starting to look more and more alike.

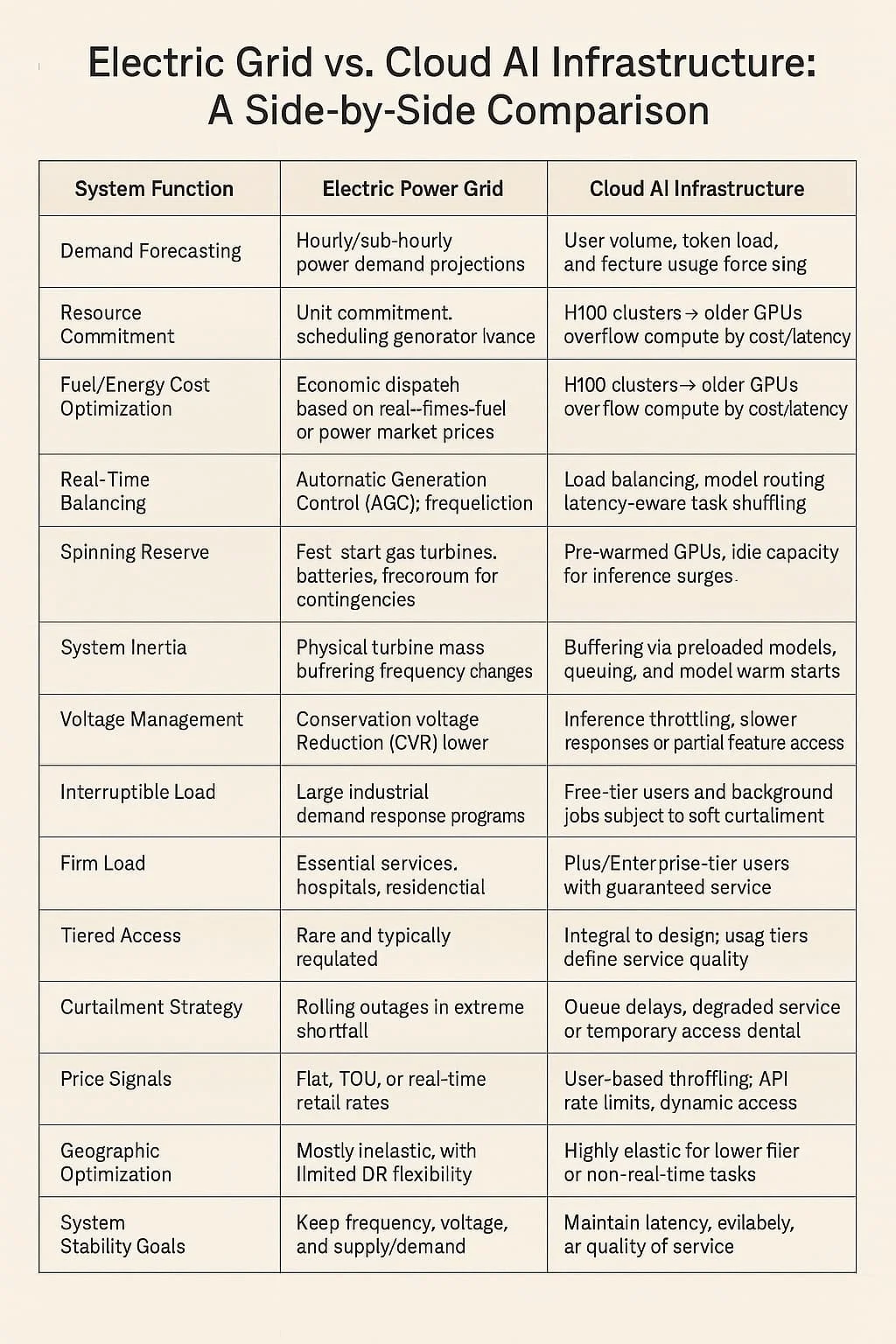

Whether you’re running a power grid or managing cloud-based AI infrastructure, the challenges are strikingly similar: finite resources, unpredictable demand, and the need for unshakable reliability. From how they forecast usage to how they respond to overloads, the invisible logic behind electricity and AI is converging.

Forecasting the Future

Electric utilities build hourly forecasts to predict how much power homes, businesses, and cities will use. These projections guide everything from generator schedules to market bids. Schedule too little generation, and the grid risks shortfalls and even blackouts. Schedule too much, and fuel and money are wasted.

AI systems do the same thing. Engineers model inference demand—how many users will log on, what they'll ask, how long their prompts will be. These forecasts help cloud platforms allocate computing resources across their sprawling networks of servers.

In both cases, good forecasts are the first step toward avoiding failure.
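A deliberately simple forecasting baseline makes this step concrete: predict each hour of tomorrow as the average of that same hour over the past week. This Python sketch rests on stated assumptions. The input is a flat list of hourly readings ending at the last hour of a complete day, and the function name is hypothetical. It is not the method any utility or cloud provider actually uses, since real forecasts typically also account for weather, holidays, and longer-term trends.

```python
from statistics import mean

def same_hour_average_forecast(history, days=7):
    """Forecast the next 24 hours of demand (hypothetical helper).

    Assumes `history` is a flat list of hourly demand values, oldest first,
    ending at hour 23 of the most recent complete day.
    """
    if days < 1 or len(history) < 24 * days:
        raise ValueError("need at least `days` full days of hourly history")
    recent = history[-24 * days:]
    # recent[d * 24 + h] is hour h of day d; average each hour across the days.
    return [mean(recent[d * 24 + h] for d in range(days)) for h in range(24)]

# Usage: two days of made-up demand, flat at 10 and then flat at 20.
forecast = same_hour_average_forecast([10] * 24 + [20] * 24, days=2)
print(forecast[0])  # 15
```

The same function could be fed hourly inference request counts instead of megawatts. The averaging logic does not care which system the numbers come from.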

Dispatching Resources: From Generators to GPUs

Power systems are built around the merit order: a dispatch stack in which the resources that are cheapest to run, like nuclear or hydro, are used first. Costlier ones, like gas-fired plants, are brought online as demand rises. At the top of the stack sit fast-start “peaker” plants, expensive to operate but able to start quickly when needed.

Cloud systems follow the same logic. AI tasks are routed first to the fastest, most efficient GPU clusters. If those are saturated, the system spills over into less optimized hardware, secondary regions, or even slower fallback compute pools. The more strained the system, the more expensive and delayed the response.

This is how both systems stretch to meet peak demand—dispatching resources up their respective cost curves.
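The merit-order idea fits in a few lines of Python: sort the available resources by their cost per unit of output and fill demand from the cheapest one upward. The resource names, capacities, and costs below are made-up illustrations. The sketch also ignores real-world constraints such as transmission limits, start-up times, and ramp rates.

```python
from dataclasses import dataclass

@dataclass
class Resource:
    name: str
    capacity: float       # maximum output (e.g. MW for a plant, requests/s for a GPU pool)
    marginal_cost: float  # cost of one more unit of output

def merit_order_dispatch(resources, demand):
    """Serve `demand` from the cheapest resources first.

    Returns (schedule, unmet): how much each resource supplies, and any demand
    left over once every resource is at full capacity.
    """
    schedule = {}
    remaining = demand
    for r in sorted(resources, key=lambda r: r.marginal_cost):
        if remaining <= 0:
            break
        take = min(r.capacity, remaining)
        schedule[r.name] = take
        remaining -= take
    return schedule, max(remaining, 0)

# Usage with hypothetical numbers: the peaker is only needed at higher demand.
fleet = [
    Resource("gas", 200, 40),
    Resource("nuclear", 300, 10),
    Resource("peaker", 50, 150),
    Resource("hydro", 100, 5),
]
print(merit_order_dispatch(fleet, 450))
# ({'hydro': 100, 'nuclear': 300, 'gas': 50}, 0)
```

The same loop could rank GPU pools by cost or latency instead of power plants by fuel cost. That substitution is the spillover behavior described for cloud systems.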

Staying Stable Under Stress

Unexpected surges, such as heat waves or viral AI trends, demand a fast, flexible response. On the grid, automatic generation control keeps frequency stable by adjusting the output of fast-ramping resources. In AI, load balancers and latency-aware routing shift inference tasks to wherever there’s available capacity.

The power grid relies on inertia, the energy stored in the spinning mass of its large turbines and generators, to cushion sudden shocks and slow the change in frequency. AI platforms use pre-warmed models and idle servers as a similar buffer. Without these buffers, a sudden spike in usage could cause a blackout on the grid or a crash in the cloud.

Throttling Instead of Failing

Sometimes, a system can’t fully meet demand—but it can still avoid collapse. Utilities may lower distribution voltage slightly during tight conditions, shaving load without cutting off customers entirely. It’s a quiet sacrifice most people never notice.

AI systems have their own version: inference throttling. During peak load, users might experience:

Slower response times

Delays in image generation or coding features

Temporary unavailability for non-paying users

Rather than shutting down, the system degrades gracefully. It’s the digital equivalent of dimming the lights.
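The staged fallback in the list above can be expressed as a set of load thresholds. In this minimal Python example, `utilization` is demand divided by available capacity. The thresholds (80%, 90%, 100%) and the measure names are hypothetical assumptions, not settings from any real AI platform.

```python
# Hypothetical thresholds: each measure switches on once utilization
# (demand / available capacity) reaches its level.
DEGRADATION_STEPS = [
    (0.8, "queue requests (slower responses)"),
    (0.9, "delay image generation and coding features"),
    (1.0, "pause free-tier access"),
]

def degradation_plan(utilization):
    """Return the measures to apply, mildest first, at a given utilization."""
    return [measure for level, measure in DEGRADATION_STEPS if utilization >= level]

# Usage: at 95% utilization, the first two measures apply.
print(degradation_plan(0.95))
# ['queue requests (slower responses)', 'delay image generation and coding features']
```

Each step trades a little quality for headroom instead of letting the service fail outright, much as voltage reduction trims load without disconnecting anyone.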

Tiered Access: A Flexibility the Grid Can’t Afford

Electricity is delivered as a universal service. Every connected customer gets the same power, regardless of income or need. The system is built around equal access.

Not so in AI. Cloud services are tiered by design:

Free-tier users receive access when the system has capacity

Paid users are prioritized for speed, stability, and features

Complex or compute-intensive tasks may be deferred or denied during congestion

This allows AI infrastructure to shape demand in real time, something power grids have pursued only in limited forms, such as pilot programs, demand response, and dynamic pricing.
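Tiered admission can be modeled as a simple priority rule. In the hypothetical Python example below, paid requests are considered before free ones. When total demand exceeds capacity, any request above a compute threshold is deferred. The `Request` fields, the `admit` function, and the capacity units are illustrative assumptions, and this is not any provider's actual policy.

```python
from dataclasses import dataclass

@dataclass
class Request:
    user_id: str
    paid: bool
    cost: float  # compute units the request needs (hypothetical unit)

def admit(requests, capacity, heavy_threshold):
    """Split requests into (admitted, deferred) under a fixed compute capacity."""
    congested = sum(r.cost for r in requests) > capacity
    admitted, deferred = [], []
    remaining = capacity
    # Paid requests first (False sorts before True); sorted() is stable,
    # so arrival order is kept within each tier.
    for r in sorted(requests, key=lambda r: not r.paid):
        too_heavy = congested and r.cost > heavy_threshold
        if not too_heavy and r.cost <= remaining:
            admitted.append(r)
            remaining -= r.cost
        else:
            deferred.append(r)
    return admitted, deferred

# Usage: "c" is a paid but compute-heavy request, so it waits during congestion.
queue = [Request("a", True, 4), Request("b", False, 3),
         Request("c", True, 10), Request("d", False, 2)]
admitted, deferred = admit(queue, capacity=8, heavy_threshold=5)
print([r.user_id for r in admitted], [r.user_id for r in deferred])
# ['a', 'b'] ['c', 'd']
```

When capacity is plentiful, the congestion check is false and every request is admitted. The tiers only matter when the system is under strain.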

Fuel, Electricity, and When to Compute

In energy markets, the cost of fuel drives decisions. Utilities must decide which plants to run, and when, based on the price of gas and coal and the price of power on the wholesale market. Those prices shift with the season, and wholesale power prices can also swing from hour to hour.

Cloud providers now face the same calculus. AI is power-hungry: training a large model or serving millions of queries consumes enormous amounts of electricity, and a large AI datacenter's power draw is measured in megawatts. The largest cloud providers now:

Shift flexible tasks (like model training or indexing) to times and regions with cheaper power

Enter into long-term power purchase agreements (PPAs) with renewable developers

Explore carbon-aware computing, choosing datacenters based on the carbon intensity or cost of local electricity

While real-time inference must be served on demand, many supporting tasks can be scheduled for when power is cheapest. It echoes the cost-minimizing logic of economic dispatch in the energy world, applied to demand rather than supply.
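Scheduling a flexible job for the cheapest hours is a small search problem. This Python sketch finds the cheapest contiguous block of hours for a job of fixed length. It assumes the job draws constant power, so its cost is proportional to the sum of the hourly prices. The prices are invented for illustration, and the function name is hypothetical.

```python
def cheapest_window(prices, duration):
    """Return (start_hour, total_price) of the cheapest block of `duration` hours.

    `prices` is a list of hourly electricity prices (e.g. $/MWh). The job is
    assumed to draw constant power, so its cost scales with the summed prices.
    """
    if not 0 < duration <= len(prices):
        raise ValueError("duration must be between 1 and len(prices)")
    window = sum(prices[:duration])
    best_start, best_cost = 0, window
    for start in range(1, len(prices) - duration + 1):
        # Slide the window one hour: add the new hour, drop the oldest one.
        window += prices[start + duration - 1] - prices[start - 1]
        if window < best_cost:
            best_start, best_cost = start, window
    return best_start, best_cost

# Usage: six consecutive hours of hypothetical prices, for a two-hour job.
prices = [50, 40, 30, 20, 25, 60]
print(cheapest_window(prices, 2))  # (3, 45)
```

The sliding window updates the running sum in constant time per hour, so the scan is linear in the number of hours. Passing hourly carbon-intensity values instead of prices would turn the same function into a simple carbon-aware scheduler.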

Invisible Systems, Shared Challenges

Electricity flows through the grid. Intelligence flows through the cloud. But at their core, both systems manage uncertainty, scarcity, and stability under pressure.

As AI datacenters consume more power, and as energy systems grow smarter through machine learning, the line between grid and cloud will blur. One day soon, it may be impossible to tell where one ends and the other begins.

Until then, it’s worth recognizing the quiet brilliance of both: systems that stretch, adapt, and recover—without most of us ever noticing.

This work is licensed under a Creative Commons Attribution 4.0 International License. CC BY 4.0

Feel free to share, adapt, and build upon it — just credit appropriately.